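Upgrading Proxmox VE 6.x to 7.x

Proxmox VE 7 has been released. The changes are summarized below; for the full list, see the official wiki announcement.

Latest official news: Proxmox Virtual Environment 7 with Debian 11 “Bullseye” and Ceph Pacific 16.2 released

What's new in this release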

- Based on Debian Bullseye (11)

- Ceph Pacific 16.2 as new default

- Ceph Octopus 15.2 continued support

- Kernel 5.11 default

- LXC 4.0

- QEMU 6.0

- ZFS 2.0.4

Changelog Overview

- Installer:

  - Rework the installer environment to use `switch_root` instead of `chroot`, when transitioning from initrd to the actual installer. This improves module and firmware loading, and slightly reduces memory usage during installation.
  - Automatically detect HiDPI screens, and increase console font and GUI scaling accordingly. This improves UX for workstations with Proxmox VE (for example, for passthrough).
  - Improve ISO detection:
    - Support ISOs backed by devices using USB Attached SCSI (UAS), which modern USB3 flash drives often do.
    - Linearly increase the delay of subsequent scans for a device with an ISO image, bringing the total check time from 20s to 45s. This allows for the detection of very slow devices, while continuing faster in general.
  - Use zstd compression for the initrd image and the squashfs images.
  - Setup Btrfs as root file system through the Proxmox VE Installer (Technology preview)
  - Update to busybox 1.33.1 as the core-utils provider.

- Enhancements in the web interface (GUI):

  - The node summary panel shows a high level status overview, while the separate Repository panel shows in-depth status and list of all configured repositories. Basic repository management, for example, activating or deactivating a repository, is also supported.
  - Notes panels for Guests and Nodes can now interpret Markdown and render it as HTML.
  - On manually triggered backups, you can now enable pruning with the backup-retention parameters of the target storage, if configured.
  - The storage overview now uses SI units (base 10) to be consistent with the units used in the graphs.
  - Support for security keys (like YubiKey) as SSH keys, when creating containers or preparing cloud-init images.
  - Improved rendering for IOMMU-groups when adding passthrough PCI devices to QEMU guests.
  - Improved translations, among others:
    - Arabic
    - French
    - German
    - Japanese
    - Polish
    - Turkish

- Access Control:

  - Single-Sign-On (SSO) with the new OpenID Connect access realm type. You can integrate external authorization servers, either using existing public services or your own identity and access management solution, for example, Keycloak or LemonLDAP::NG.
  - Added new permission `Pool.Audit` to allow users to see pools, without permitting them to change the pool. See breaking changes below for some possible impact in custom created roles.

- Virtual Machines (KVM/QEMU):

  - QEMU 6.0 has support for `io_uring` as an asynchronous I/O engine for virtual drives. This is now the default for newly started or migrated guests. The new default can be overridden in the guest config via `qm set VMID --DRIVE EXISTING-DRIVE-OPTS,aio=native` (where, for example, DRIVE would be `scsi0` and the OPTS can be obtained from the `qm config VMID` output).
  - EFI disks stored on Ceph now use the `writeback` caching-mode, improving boot times in case of slower or highly-loaded Ceph storages.
  - Unreferenced VM disks (not present in the configuration) are not destroyed automatically any more:
    - This was made opt-in in the GUI in Proxmox VE 6.4 and is now also opt-in in the API and with CLI tools.
    - Furthermore, if this clean-up option is enabled, only storages with content-types of VM or CT disk images, or rootdir will be scanned for unused disk-volumes.

    With this new default value, data loss is also prevented by default. This is especially beneficial in cases of dangerous and unsupported configurations, for example, where one backing storage is added twice to a Proxmox VE cluster with an overlapping set of content-types.
  - VM snapshot states are now always removed when a VM gets destroyed.
  - Improved logging during live restore.

- Container

  - Support for containers on custom storages.
  - Clone: Clear the cloned container's `/etc/machine-id` when systemd is in use or that file exists. This ID must be unique, in order to prevent issues such as MAC address duplication on Linux bridges.

- Migration

  - QEMU guests: The migration protocol for sending the Spice ticket changed in Proxmox VE 6.1. The code for backwards compatibility has now been dropped, prohibiting the migration of VMs from Proxmox VE 7.0 to hypervisors running Proxmox VE 6.1 and older. Always upgrade to the latest Proxmox VE 6.4, before starting the upgrade to Proxmox VE 7.
  - Containers: The `force` parameter to `pct migrate`, which enabled the migration of containers with bind mounts and device mounts, has been removed. Its functionality has been replaced by marking the respective mount-points as `shared`.

- High Availability (HA):

  - Release LRM locks and disable watchdog protection if all services of the node the LRM is running on got removed and no new ones were added for over 10 minutes. This reduces the possible subtle impact of an active watchdog after a node was cleared of HA services, for example, when HA services were previously only configured for evaluation.
  - Add a new HA service state `recovery` and transform the `fence` state into a transition to that new state. This gives a clear distinction between services that are yet to be fenced and services whose node already got fenced and are now awaiting recovery.
  - Continuously retry recovery, even if no suitable node was found. This improves recovery for services in restricted HA groups, as only with those can there be a quorate and working partition but no available new node for a specific service. For example, if HA is used to ensure that a HA service using a local resource, like a VM using local storage, will be restarted and up as long as the node is running.
  - Allow manually disabling HA services that are currently in the `recovery` state, for more admin control in those situations.

- Backup and Restore

  - Backups of QEMU guests now support encryption using a master key.
  - It is now possible to back up VM templates with SATA and IDE disks.
  - The `maxfiles` parameter has been deprecated in favor of the more flexible `prune-` options.
  - vzdump now defaults to keeping all backups, instead of keeping only the latest one.
  - Caching during live restore got reworked, significantly reducing both the total restore time and the time to a fully booted guest.
  - Support file-restore for VMs using ZFS or LVM for one, or more, storages in the guest OS.

- Network:

  - Default to the modern `ifupdown2` for new installations using the Proxmox VE official ISO. The legacy `ifupdown` is still supported in Proxmox VE 7, but may be deprecated in a future major release.

- Time Synchronization:

  - Due to the design limitations of `systemd-timesyncd`, which make it problematic for server use, new installations will install `chrony` as the default NTP daemon. If you upgrade from a system using `systemd-timesyncd`, it's recommended that you manually install either `chrony`, `ntp` or `openntpd`.

- Ceph Server

  - Support for Ceph 16.2 Pacific
  - Ceph monitors with multiple networks can now be created using the CLI, provided you have multiple `public_networks` defined. Note that multiple `public_networks` are usually not needed, but in certain deployments, you might need to have monitors in different network segments.
  - Improved support for IPv6 and mixed setups, when creating a Ceph monitor.
  - Beginning with Ceph 16.2 Pacific, the balancer module is enabled by default for new clusters, leading to better distribution of placement groups among the OSDs.
  - Newly created Bluestore OSDs will benefit from the newly enabled sharding configuration for rocksdb, which should lead to better caching of frequently read metadata and less space needed during compaction.

- Storage

  - Support for Btrfs as technology preview
    - Add an existing Btrfs file system as storage to Proxmox VE, using it for virtual machines, containers, as a backup target, or to store and serve ISO and container appliance images.
  - The outdated, deprecated, internal DRBD Storage plugin has been removed. A derived version targeting newer DRBD is maintained by Linbit.
  - More use of content-type checks instead of checking a hard-coded storage-type list in various places.
  - Support downloading ISO and container appliance images directly from a URL to a storage, including optional checksum verifications.

- Disk Management

  - Wiping disks is now possible from the GUI, enabling you to clear disks which were previously in use and create new storages on them. Note that wiping a disk is a destructive operation: any data on the disk will be destroyed permanently.

- pve-zsync

  - Separately configurable number of snapshots on source and destination, allowing you to keep a longer history on the destination, without the requirement to have the storage space available on the source.

- Firewall

  - The sysctl settings needed by pve-firewall are now set on every update to prevent disadvantageous interactions during other operations (for example package installations).

- Certificate management

  - The ACME standalone plugin has improved support for dual-stacked (IPv4 and IPv6) environments and no longer relies on the configured addresses to determine its listening interface.
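The `aio` override mentioned in the QEMU 6.0 item above means restating a drive's existing options with `aio=native` appended. The following is a minimal sketch of assembling that command from one drive line of `qm config VMID` output. `build_aio_override` is a hypothetical helper name, the sample drive line is made up, and the function only prints the command rather than running it.

```shell
# Hypothetical helper: print (not run) the `qm set` command that re-applies a
# drive's existing options with aio=native appended.
#   $1 = VM ID
#   $2 = one drive line copied from `qm config VMID`, e.g.
#        "scsi0: local-lvm:vm-100-disk-0,size=32G"
build_aio_override() (
    vmid="$1"
    line="$2"
    drive="${line%%:*}"   # text before the first colon, e.g. "scsi0"
    opts="${line#*: }"    # text after "scsi0: ", i.e. the existing options
    # Drop an aio= setting that is already present so it is not duplicated.
    opts="$(printf '%s' "$opts" | sed -E 's/,aio=[^,]*//g')"
    printf 'qm set %s --%s %s,aio=native\n' "$vmid" "$drive" "$opts"
)
```

Review the printed command before running it, since `qm set` replaces the whole option string of the drive.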

Breaking Changes

- Pool permissions

  The old permission `Pool.Allocate` now only allows users to edit pools, not to see them. Therefore, `Pool.Audit` must be added to existing custom roles with the old `Pool.Allocate` to preserve the same behavior. All built-in roles are updated automatically.

- VZDump

  - Hookscript: The `TARFILE` environment variable was deprecated in Proxmox VE 6, in favor of `TARGET`. In Proxmox VE 7, it has been removed entirely and thus, it is not exported to the hookscript anymore.
  - The `size` parameter of `vzdump` has been deprecated, and setting it is now an error.

- API deprecations, moves and removals

  - The `upgrade` parameter of the `/nodes/{node}/(spiceshell|vncshell|termproxy)` API method has been replaced by providing `upgrade` as `cmd` parameter.
  - The `/nodes/{node}/cpu` API method has been moved to `/nodes/{node}/capabilities/qemu/cpu`
  - The `/nodes/{node}/ceph/disks` API method has been replaced by `/nodes/{node}/disks/list`
  - The `/nodes/{node}/ceph/flags` API method has been moved to `/cluster/ceph/flags`
  - The `db_size` and `wal_size` parameters of the `/nodes/{node}/ceph/osd` API method have been renamed to `db_dev_size` and `wal_dev_size` respectively.
  - The `/nodes/<node>/scan/usb` API method has been moved to `/nodes/<node>/hardware/usb`

- CIFS credentials have been stored in the namespaced `/etc/pve/priv/storage/<storage>.pw` instead of `/etc/pve/<storage>.cred` since Proxmox VE 6.2. Existing credentials will get moved during the upgrade, allowing you to drop fallback code.

- The external storage plugin mechanism had an ABI-version bump that reset the ABI-age, thus marking an incompatible breaking change that external plugins must adapt to before they can be loaded again.

- `qm|pct status <VMID> --verbose`, and the respective status API call, only include the `template` line if the guest is a template, instead of outputting `template:` for guests which are not templates.
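Since `TARFILE` is no longer exported to vzdump hookscripts, it can help to check existing scripts for leftover uses before upgrading. A minimal sketch follows; `find_tarfile_usage` is a hypothetical helper, and the script path you pass to it depends on where your backup job's hookscript lives.

```shell
# Hypothetical check: print the lines (with line numbers) of a hookscript that
# still mention the removed TARFILE variable.
#   $1 = path to the hookscript
# Exit status is 0 if at least one match was found, non-zero otherwise.
find_tarfile_usage() (
    # -n: show line numbers, -w: match TARFILE only as a whole word
    grep -nw 'TARFILE' "$1"
)
```

Each reported line is a candidate for switching to `TARGET`, the replacement named in the changelog.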

Known Issues

- Network: Due to the updated systemd version and, for most upgrades, the newer kernel version (5.4 to 5.11), some network interfaces might change upon reboot:

  - Some may change their name. For example, due to newly supported functions, a change from `enp33s0f0` to `enp33s0f0np0` could occur. We observed such changes with high-speed Mellanox models.
  - Bridge MAC address selection has changed in Debian Bullseye: it is now generated based on the interface name and the `machine-id(5)` of the system. Systems installed using the Proxmox VE 4.0 to 5.4 ISO may have a non-unique machine-id. These systems will have their machine-id re-generated automatically on upgrade, to avoid a potentially duplicated bridge MAC.

  If you do the upgrade remotely, make sure you have a backup method of connecting to the host (for example, IPMI/iKVM, tiny-pilot, another network accessible by a cluster node, or physical access), in case the network used for SSH access becomes unreachable because it fails to come up after a reboot.

- Container:

  - cgroupv2 support by the container’s OS is needed to run in a pure cgroupv2 environment. Containers running systemd version 231 or newer support cgroupv2, as do containers that do not use systemd as init system in the first place (e.g., Alpine Linux or Devuan). CentOS 7 and Ubuntu 16.10 are two prominent examples of Linux distribution releases which have a systemd version that is too old to run in a cgroupv2 environment. For details and possible fixes see: https://pve.proxmox.com/pve-docs/chapter-pct.html#pct_cgroup_compat
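The systemd threshold of 231 quoted above can be checked per container. This is a minimal sketch, assuming the first line of `systemctl --version` looks like `systemd 219` followed by optional text. `systemd_supports_cgroupv2` is a hypothetical helper that reads that output on stdin.

```shell
# Sketch: succeed (exit 0) if the systemd version read from stdin is 231 or
# newer, the threshold named in the known issue above.
# Assumption: the first input line starts with "systemd <number>".
systemd_supports_cgroupv2() (
    # Extract the version number from the first line only.
    ver="$(sed -n '1s/^systemd \([0-9][0-9]*\).*/\1/p')"
    [ -n "$ver" ] && [ "$ver" -ge 231 ]
)
```

For a running container it could be fed like this, with `101` standing in for a container ID: `pct exec 101 -- systemctl --version | systemd_supports_cgroupv2 || echo "CT 101: systemd too old for cgroupv2"`.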

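Upgrading from version 6 to version 7

To upgrade to version 7, the official recommendation is to first update to the latest 6.x release and only then upgrade to 7. At the time of writing, the latest 6.x release is 6.4-13.

Before upgrading, check whether any LXC containers in your environment run CentOS 7 or Ubuntu 16.10. If so, hold off on the upgrade: PVE 7.0 moves from cgroupv1 to cgroupv2, which these two older systems do not support, so after the upgrade those containers will not run properly.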
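To find containers worth checking, one option is to scan the container config files for their `ostype`. This is a minimal sketch under two assumptions: the configs live in `/etc/pve/lxc` on the node, and each contains a line such as `ostype: centos`. `list_cgroup_candidates` is a hypothetical helper. Because `ostype` does not record the release, every hit still has to be inspected by hand.

```shell
# Sketch: list container config files whose ostype is centos or ubuntu.
#   $1 = config directory (assumed default: /etc/pve/lxc)
# Prints one matching file path per line.
list_cgroup_candidates() (
    dir="${1:-/etc/pve/lxc}"
    # -l: print only the names of files that contain a matching line
    grep -lE '^ostype:[[:space:]]*(centos|ubuntu)[[:space:]]*$' "$dir"/*.conf 2>/dev/null
)
```

The file name of each hit is the container ID, so `101.conf` points at container 101.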

- Update commands (bring 6.x fully up to date first)

apt update && apt dist-upgrade -y

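A reboot is recommended after the update. Use a plain reboot so that disks are synced and services stop cleanly.

reboot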
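After the reboot, it is worth confirming that the node really is on 6.4 before continuing. A minimal sketch, assuming `pveversion` prints a line of the form `pve-manager/6.4-13/<hash> (running kernel: ...)`; `is_pve_6_4` is a hypothetical helper that reads that line on stdin.

```shell
# Sketch: succeed (exit 0) if the pveversion line on stdin reports 6.4.
# Assumption: the line starts with "pve-manager/<major>.<minor>-<patch>/".
is_pve_6_4() (
    ver="$(sed -n 's|^pve-manager/\([0-9][0-9]*\.[0-9][0-9]*\).*|\1|p')"
    [ "$ver" = "6.4" ]
)
```

Typical use would be `pveversion | is_pve_6_4 || echo "update to the latest 6.4 first"`.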

- 先下「

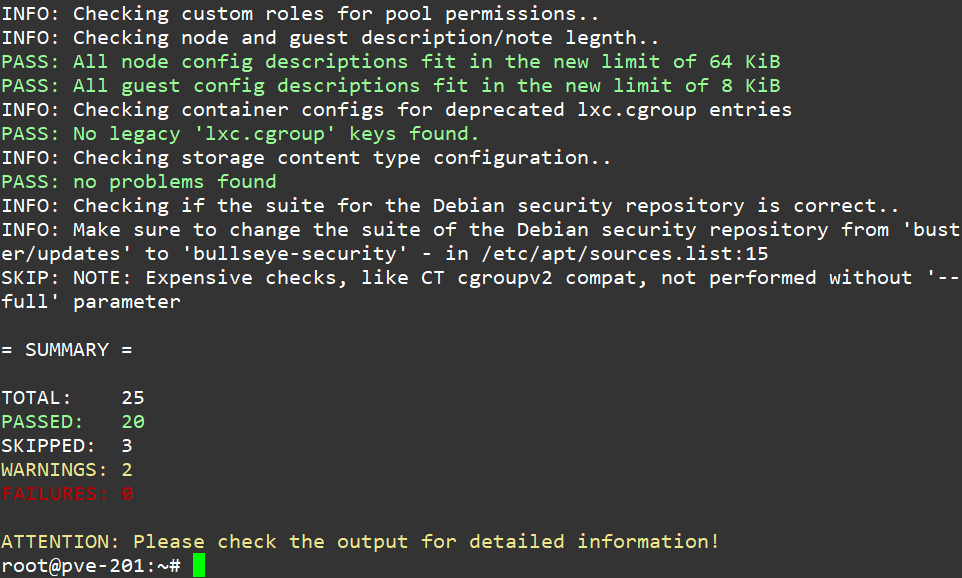

pve6to7」檢清更新的清單,執行結果如下

pve6to7

- To run it with all checks enabled:

pve6to7 --full
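The checker output can be long, so a quick tally of the problem lines can help. This is a minimal sketch that assumes each finding is printed as plain text starting with `WARN:` or `FAIL:` at the beginning of a line (no colour escape codes); `summarize_checks` is a hypothetical helper that reads the output on stdin.

```shell
# Sketch: count warning and failure lines in checker output read from stdin.
# Assumption: findings start with "WARN:" or "FAIL:" at the start of a line.
summarize_checks() (
    awk '/^WARN:/ {w++} /^FAIL:/ {f++}
         END {printf "warnings=%d failures=%d\n", w, f}'
)
```

For example, `pve6to7 --full | summarize_checks`. Anything other than `failures=0` should be resolved before going further.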

- Refresh the package index and apply any pending updates

apt update

apt dist-upgrade

- Edit `sources.list` to switch the Debian repositories to Bullseye

sed -i 's/buster\/updates/bullseye-security/g;s/buster/bullseye/g' /etc/apt/sources.list
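Because `sed -i` edits the file in place, it can be reassuring to preview the result first. The sketch below applies the same two substitutions to stdin instead of the file; `preview_bullseye_rewrite` is a hypothetical helper name.

```shell
# Sketch: apply the same buster -> bullseye substitutions as the command
# above, but to stdin, so nothing on disk is modified.
preview_bullseye_rewrite() (
    # The security suite is renamed first, then every remaining "buster".
    sed 's/buster\/updates/bullseye-security/g;s/buster/bullseye/g'
)
```

One way to use it is `preview_bullseye_rewrite < /etc/apt/sources.list | diff /etc/apt/sources.list -`, which shows exactly which lines would change.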

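- Update the Proxmox VE repository file. The command below rewrites `pve-install-repo.list`; if you use the enterprise (subscription) repository, apply the same substitution to `/etc/apt/sources.list.d/pve-enterprise.list` instead. If you do not have a subscription, remember to comment out the entry in that file.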

sed -i -e 's/buster/bullseye/g' /etc/apt/sources.list.d/pve-install-repo.list

- Update the Ceph repository (only needed if the node runs Ceph)

echo "deb http://download.proxmox.com/debian/ceph-octopus bullseye main" > /etc/apt/sources.list.d/ceph.list
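Once the repository files are edited, a final check for leftover `buster` entries can catch a file that was missed. A minimal sketch; `find_stale_sources` is a hypothetical helper, and the files to check are passed as arguments.

```shell
# Sketch: report active (uncommented) APT source lines that still mention
# buster. Prints "file:line:text" for each hit.
#   $@ = source files to check
find_stale_sources() (
    # -H: always print the file name, -n: print the line number
    grep -Hn 'buster' "$@" 2>/dev/null |
        grep -v '^[^:]*:[0-9]*:[[:space:]]*#'   # ignore commented-out lines
)
```

For example, `find_stale_sources /etc/apt/sources.list /etc/apt/sources.list.d/*.list`. No output means no active entry still points at Buster.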

Upgrading to version 7

- Once the steps above are complete, you can start the upgrade to version 7

apt update && apt dist-upgrade -y

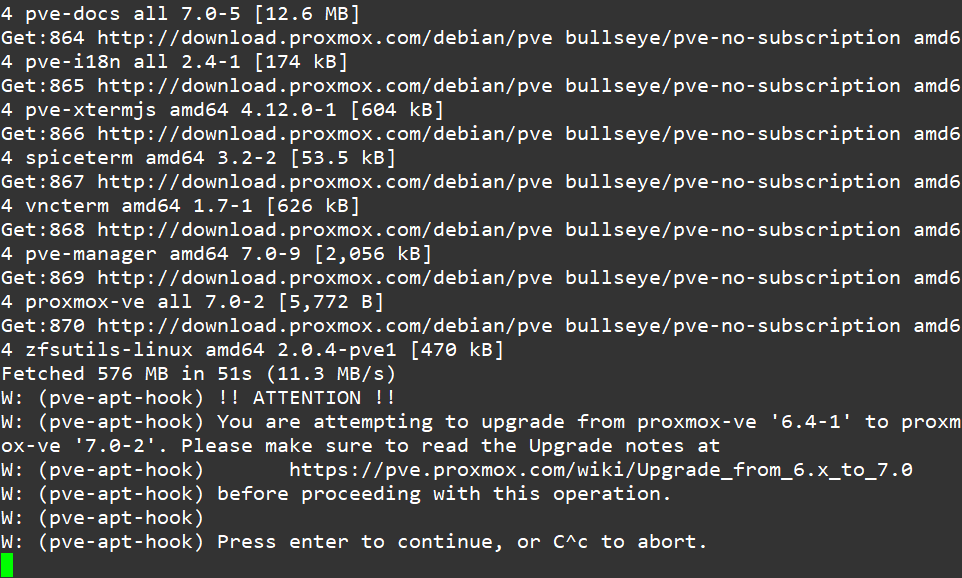

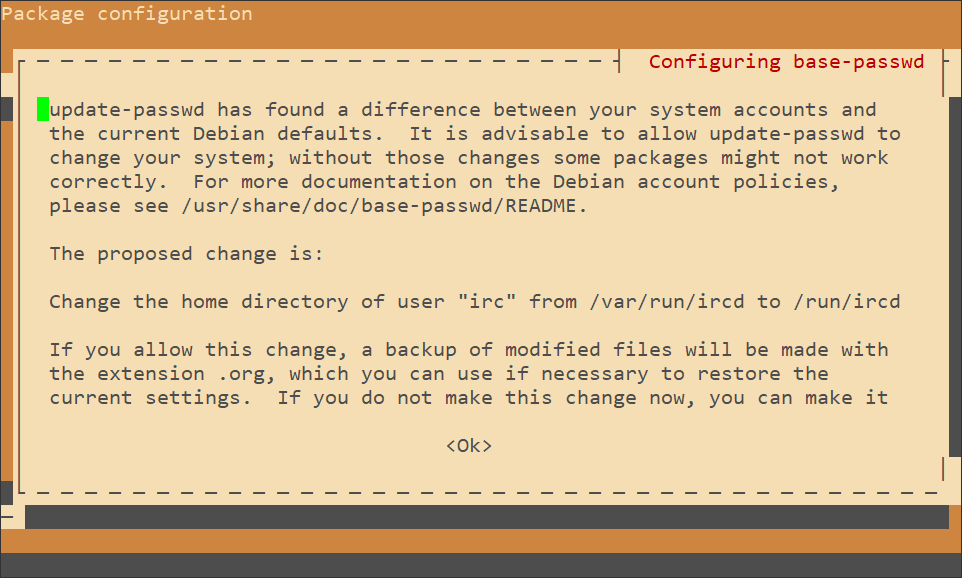

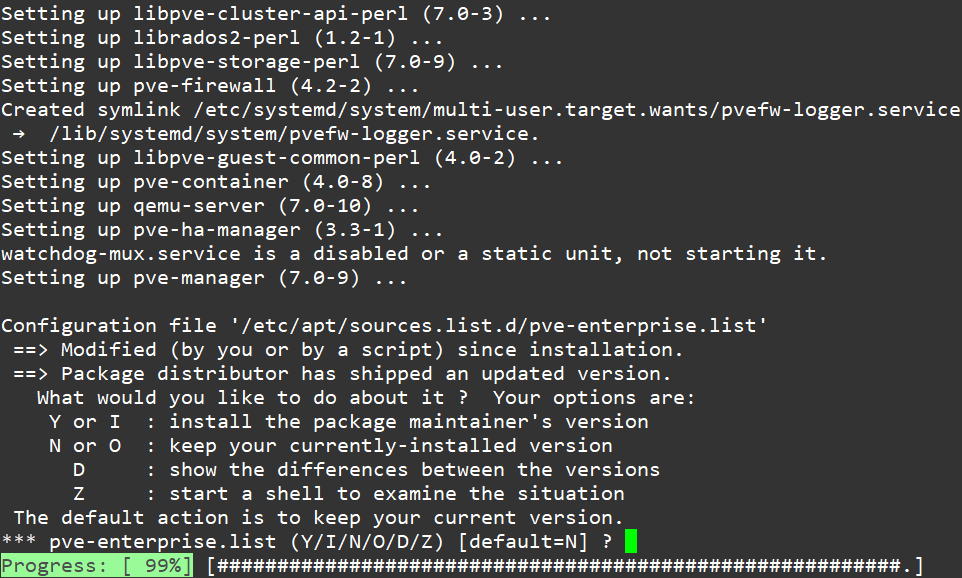

- Partway through, this message appears; just press Enter to continue the upgrade

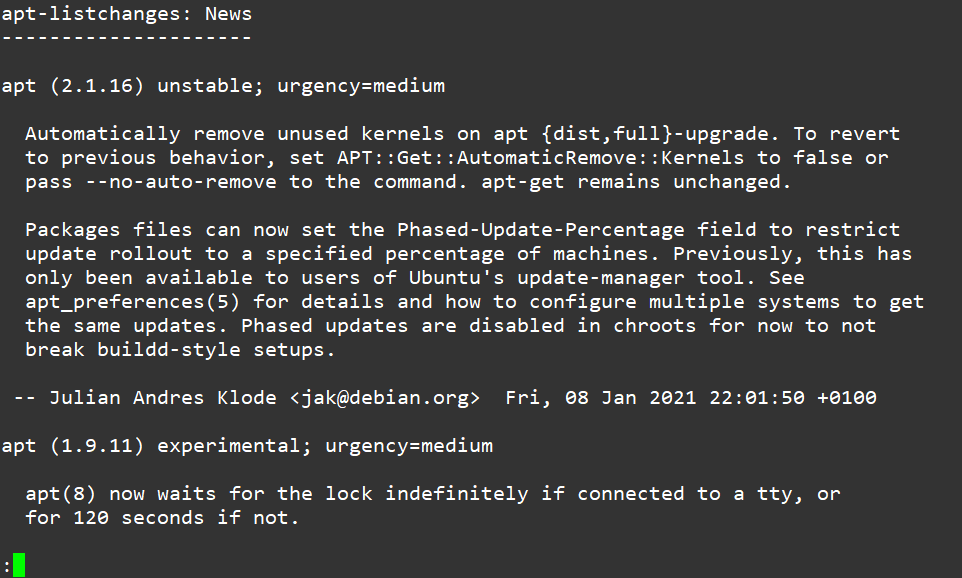

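- Partway through, a list of the changes being applied is shown. Press `Enter` to scroll to the bottom, then press `q` to quit, and the upgrade continues.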

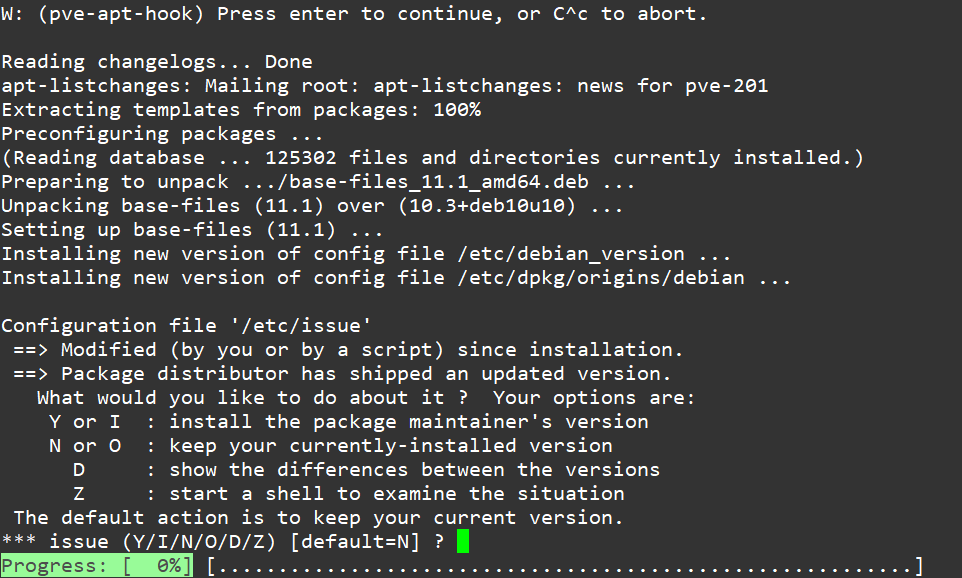

- For the upgrade prompts, the defaults are fine; just press `Enter`

- Press `OK`

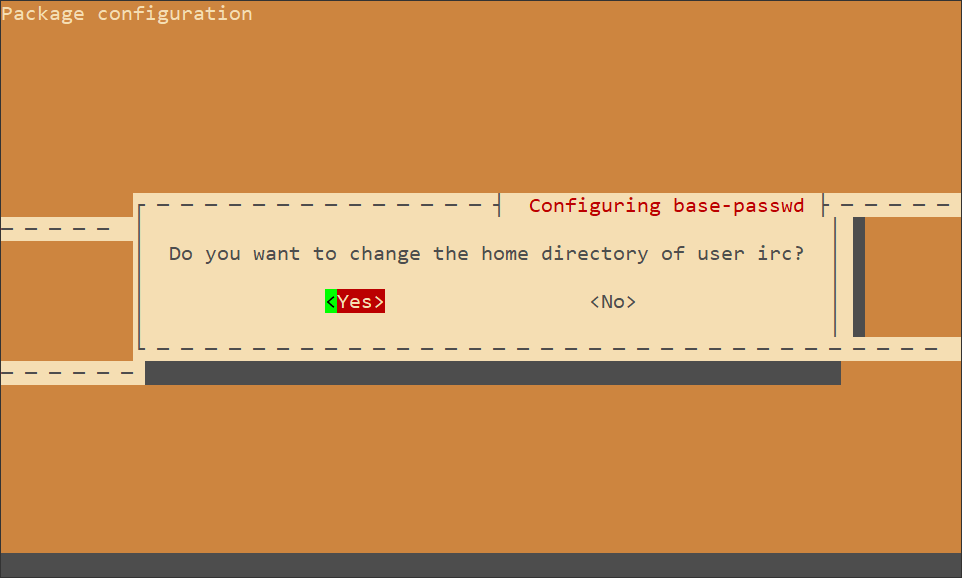

- Select `YES`

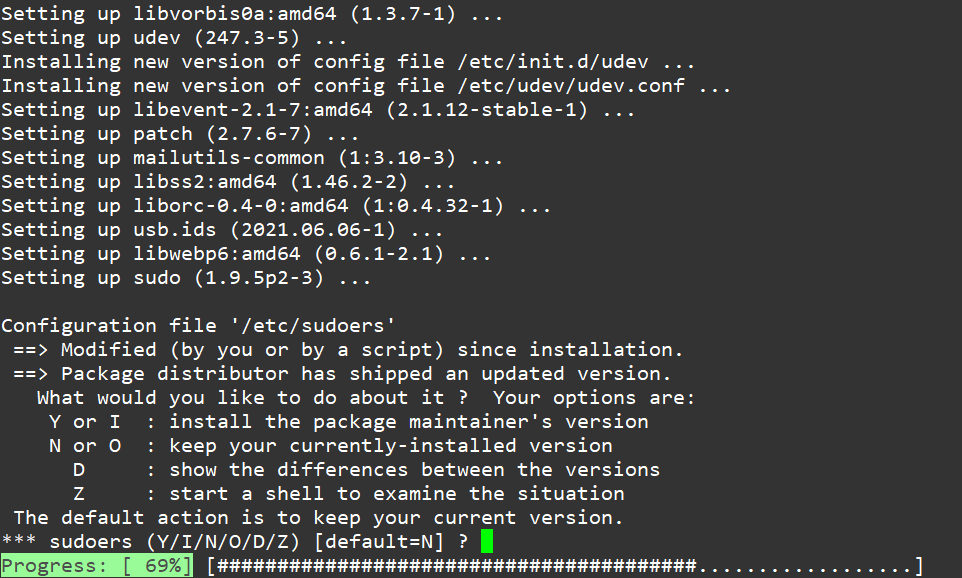

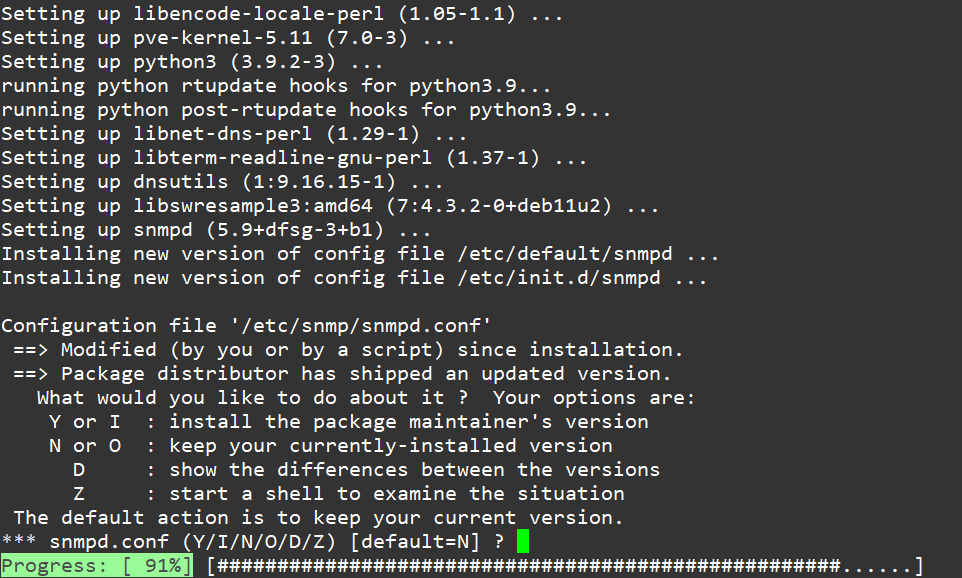

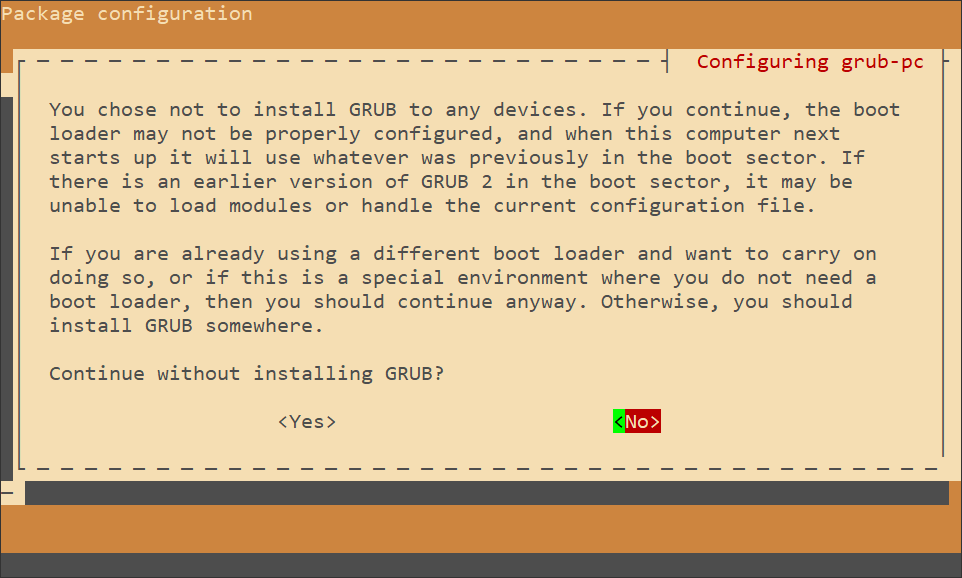

- Keep the default, `N`

- Keep the default, `N`

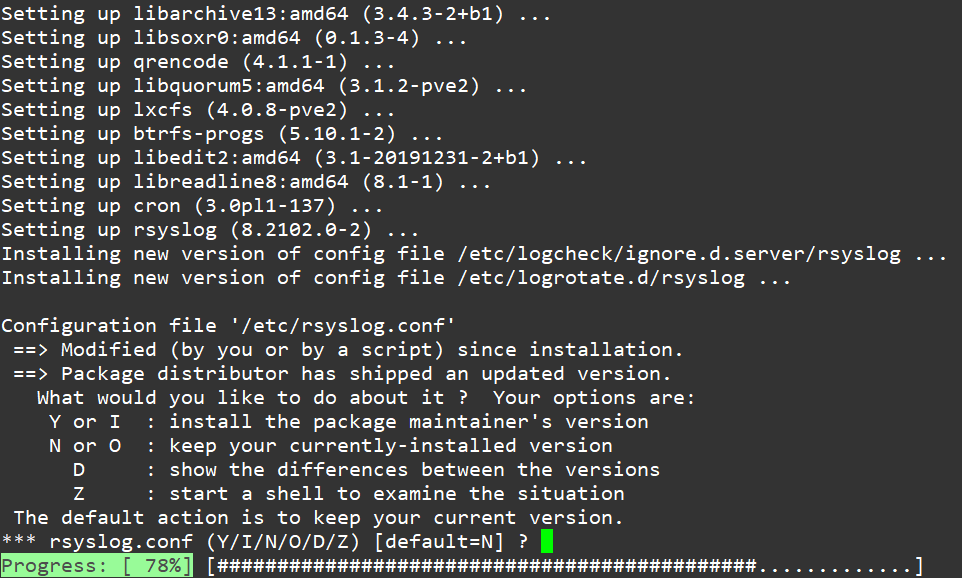

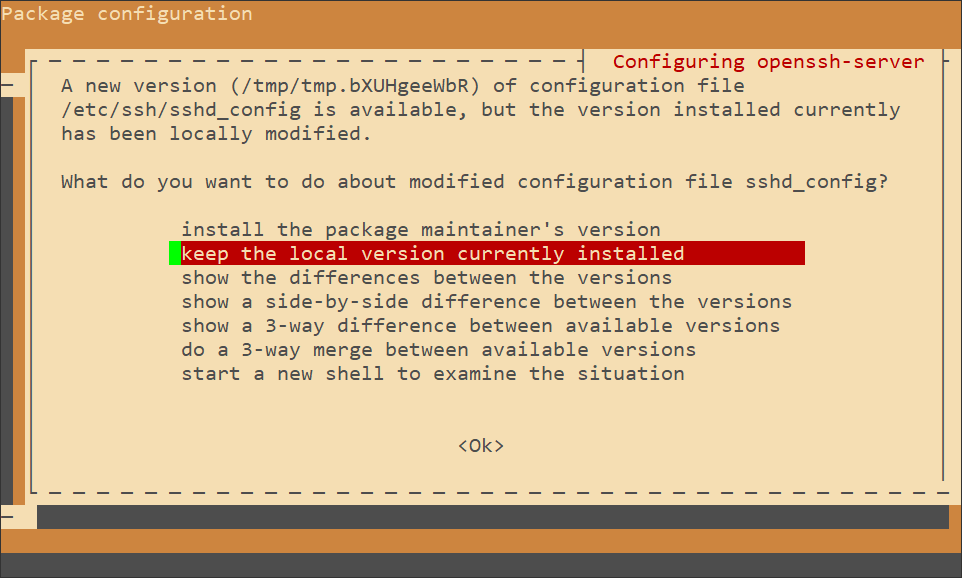

- Keep the default, `keep the local version currently installed`

- Keep the default, `N`

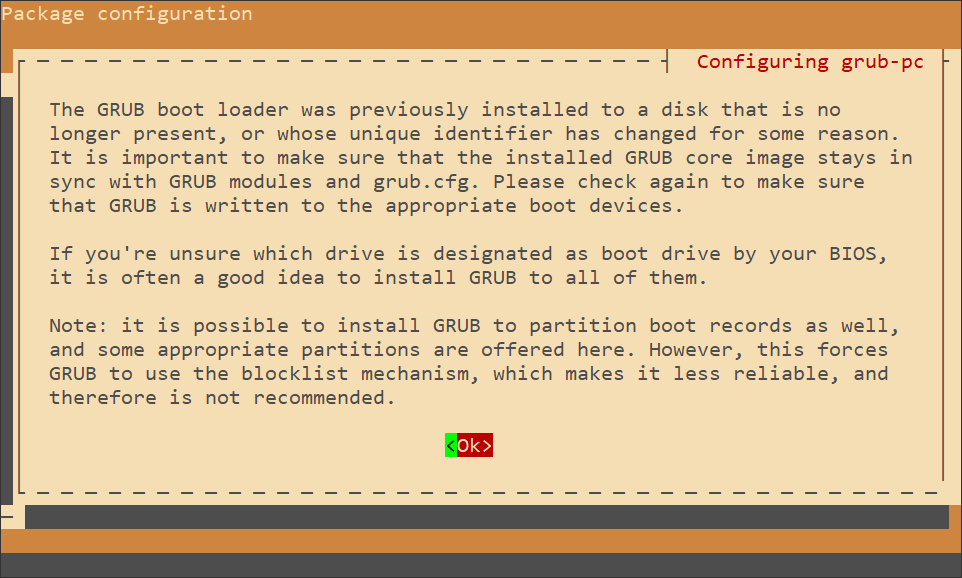

- Press `OK`

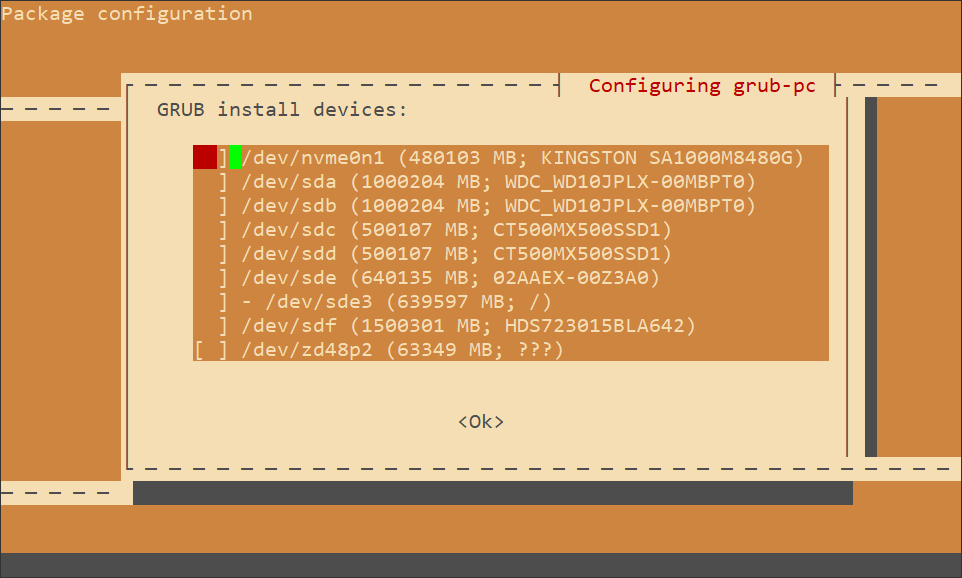

- Select the `grub` install path: choose the disk the system is installed on

- Select `Yes`

- Keep the default, `N`