Proxmox VE Replication Not Working

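BUBU reinstalled PVE because the company's PVE system had a configuration problem. Afterward, replication stopped working in this clustered environment. The fixes are described below.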

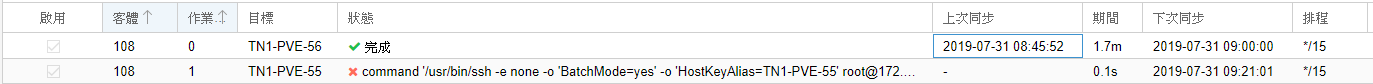

Case 1:

2019-07-31 08:52:34 108-1: start replication job

2019-07-31 08:52:34 108-1: guest => VM 108, running => 13297

2019-07-31 08:52:34 108-1: volumes => hdd1-zfs:vm-108-disk-0

2019-07-31 08:52:34 108-1: (remote_prepare_local_job) Host key verification failed.

2019-07-31 08:52:34 108-1: (remote_prepare_local_job)

2019-07-31 08:52:34 108-1: end replication job with error: command '/usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=TN1-PVE-55' [email protected] -- pvesr prepare-local-job 108-1 hdd1-zfs:vm-108-disk-0 --last_sync 0' failed: exit code 255

The on-screen messages show that replication fails because of an SSH host key problem ("Host key verification failed").
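To confirm this cause without any interactive prompt, the SSH call that pvesr makes can be repeated by hand. The sketch below is a hypothetical helper (`check_replication_ssh` and the `SSH_BIN` override are illustrative names, not part of PVE). It reuses the options visible in the log above (`-e none`, `BatchMode=yes`, `HostKeyAlias=`) and assumes the connection is made as `root`, because the user and address in the logged command are obscured.

```shell
#!/bin/sh
# Hypothetical helper: repeat the SSH call pvesr makes and report whether it
# succeeds without any prompt. SSH_BIN exists only so the function can be
# tested with a stub; it defaults to the real ssh client.
SSH_BIN="${SSH_BIN:-/usr/bin/ssh}"

check_replication_ssh() {
    alias_name="$1"   # node name used as HostKeyAlias, e.g. TN1-PVE-55
    address="$2"      # node address, e.g. 172.16.1.55
    rc=0
    # BatchMode=yes disables all prompts, so an untrusted host key makes ssh
    # exit with 255 ("Host key verification failed") instead of asking yes/no.
    # Connecting as root is an assumption (the logged user/address is obscured).
    "$SSH_BIN" -e none -o BatchMode=yes -o "HostKeyAlias=$alias_name" \
        "root@$address" /bin/true || rc=$?
    if [ "$rc" -eq 0 ]; then
        echo "$alias_name: ok"
    else
        echo "$alias_name: failed (exit $rc)"
    fi
}

# Example: check_replication_ssh TN1-PVE-55 172.16.1.55
```

Run it on the source node for each replication target. A failure with exit code 255 matches the error in the replication log; other SSH problems (such as authentication) also produce 255, so read the ssh message that is printed alongside it.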

Solution:

SSH into the affected PVE host and run the following command:

pvesr run --id 108-1 --verbose # change 108-1 to the ID of the failing job: 108 is the guest ID, 1 is the job number

The output is shown below; it matches the log displayed in the web UI.

start replication job

guest => VM 108, running => 13297

volumes => hdd1-zfs:vm-108-disk-0

(remote_prepare_local_job) Host key verification failed.

(remote_prepare_local_job)

end replication job with error: command '/usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=TN1-PVE-55' [email protected] -- pvesr prepare-local-job 108-1 hdd1-zfs:vm-108-disk-0 --last_sync 0' failed: exit code 255

command '/usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=TN1-PVE-55' [email protected] -- pvesr prepare-local-job 108-1 hdd1-zfs:vm-108-disk-0 --last_sync 0' failed: exit code 255

Once the cause is confirmed, run the following command:

/usr/bin/ssh -e none -o 'HostKeyAlias=TN1-PVE-55' [email protected] /bin/true

SSH then asks whether to trust the host; answer yes:

The authenticity of host 'tn1-pve-55 (172.16.1.55)' can't be established.

ECDSA key fingerprint is SHA256:V1r8PubFmshlEiDgQ/XsDepfn+/0LyRZT25efLSZBuM.

Are you sure you want to continue connecting (yes/no)? yes

After you answer yes, SSH prints:

Warning: Permanently added 'tn1-pve-55' (ECDSA) to the list of known hosts.

Then run the replication job again to check that it now syncs normally:

pvesr run --id 108-1 --verbose
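When several jobs failed at once, re-running them one at a time gets tedious. The following sketch loops over job IDs, checks that each has the `<guest ID>-<job number>` form used by pvesr (for example 108-1), and calls `pvesr run --id ... --verbose` exactly as above. `rerun_jobs`, `valid_job_id`, and `PVESR_BIN` are hypothetical names; the override exists only so the loop can be exercised without a PVE host.

```shell
#!/bin/sh
# Hypothetical helpers: validate replication job IDs and re-run each job.
# PVESR_BIN exists only so the loop can be tested with a stub.
PVESR_BIN="${PVESR_BIN:-pvesr}"

# A job ID is "<guest ID>-<job number>", e.g. 108-1 (guest 108, job 1).
valid_job_id() {
    _guest=${1%%-*}
    _job=${1#*-}
    [ "$_guest" != "$1" ] || return 1                # no '-' in the ID
    case "$_guest" in ''|*[!0-9]*) return 1 ;; esac  # guest must be digits
    case "$_job" in ''|*[!0-9]*) return 1 ;; esac    # job must be digits
    return 0
}

rerun_jobs() {
    for job_id in "$@"; do
        if ! valid_job_id "$job_id"; then
            echo "$job_id: invalid job ID (expected <guest>-<job>)"
            continue
        fi
        # Same command as in the article; its verbose log goes to the terminal.
        if "$PVESR_BIN" run --id "$job_id" --verbose; then
            echo "$job_id: OK"
        else
            echo "$job_id: FAILED"
        fi
    done
}

# Example: rerun_jobs 108-1 101-0
```

Each job's own verbose log is printed first, followed by a one-line OK/FAILED summary, so a failing job can be spotted quickly.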

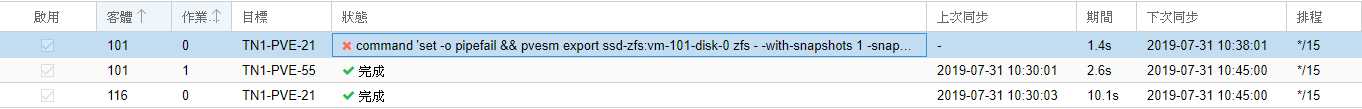

Case 2:

2019-07-31 10:08:01 101-0: start replication job

2019-07-31 10:08:01 101-0: guest => VM 101, running => 13819

2019-07-31 10:08:01 101-0: volumes => ssd-zfs:vm-101-disk-0

2019-07-31 10:08:01 101-0: freeze guest filesystem

2019-07-31 10:08:01 101-0: create snapshot '__replicate_101-0_1564538881__' on ssd-zfs:vm-101-disk-0

2019-07-31 10:08:01 101-0: thaw guest filesystem

2019-07-31 10:08:02 101-0: full sync 'ssd-zfs:vm-101-disk-0' (__replicate_101-0_1564538881__)

2019-07-31 10:08:02 101-0: full send of ssd-rpool/vm-101-disk-0@__replicate_101-2_1564413301__ estimated size is 30.5G

2019-07-31 10:08:02 101-0: send from @__replicate_101-2_1564413301__ to ssd-rpool/vm-101-disk-0@__replicate_101-3_1564470016__ estimated size is 307M

2019-07-31 10:08:02 101-0: send from @__replicate_101-3_1564470016__ to ssd-rpool/vm-101-disk-0@__replicate_101-1_1564538401__ estimated size is 349M

2019-07-31 10:08:02 101-0: send from @__replicate_101-1_1564538401__ to ssd-rpool/vm-101-disk-0@__replicate_101-0_1564538881__ estimated size is 46.7M

2019-07-31 10:08:02 101-0: total estimated size is 31.1G

2019-07-31 10:08:02 101-0: TIME SENT SNAPSHOT

2019-07-31 10:08:02 101-0: ssd-rpool/vm-101-disk-0 name ssd-rpool/vm-101-disk-0 -

2019-07-31 10:08:02 101-0: volume 'ssd-rpool/vm-101-disk-0' already exists

2019-07-31 10:08:02 101-0: warning: cannot send 'ssd-rpool/vm-101-disk-0@__replicate_101-2_1564413301__': signal received

2019-07-31 10:08:02 101-0: TIME SENT SNAPSHOT

2019-07-31 10:08:02 101-0: warning: cannot send 'ssd-rpool/vm-101-disk-0@__replicate_101-3_1564470016__': Broken pipe

2019-07-31 10:08:02 101-0: warning: cannot send 'ssd-rpool/vm-101-disk-0@__replicate_101-1_1564538401__': Broken pipe

2019-07-31 10:08:02 101-0: warning: cannot send 'ssd-rpool/vm-101-disk-0@__replicate_101-0_1564538881__': Broken pipe

2019-07-31 10:08:02 101-0: cannot send 'ssd-rpool/vm-101-disk-0': I/O error

2019-07-31 10:08:02 101-0: command 'zfs send -Rpv -- ssd-rpool/vm-101-disk-0@__replicate_101-0_1564538881__' failed: exit code 1

2019-07-31 10:08:02 101-0: delete previous replication snapshot '__replicate_101-0_1564538881__' on ssd-zfs:vm-101-disk-0

2019-07-31 10:08:02 101-0: end replication job with error: command 'set -o pipefail && pvesm export ssd-zfs:vm-101-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_101-0_1564538881__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=TN1-PVE-21' [email protected] -- pvesm import ssd-zfs:vm-101-disk-0 zfs - -with-snapshots 1' failed: exit code 255

The on-screen messages show that the target already holds data from an earlier sync ("volume 'ssd-rpool/vm-101-disk-0' already exists"). A mismatch between that data and the source is the likely cause of this error.

Solution:

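First, list the snapshots that belong to this VM on both the source and the target.

Source:

source host: zfs list -t snapshot | grep 101 # change 101 to the ID of the VM you want to check

Target:

target host: zfs list -t snapshot | grep 101 # change 101 to the ID of the VM you want to check

Source output: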

root@TN1-PVE-45:~# zfs list -t snapshot | grep 101

ssd-rpool/vm-101-disk-0@__replicate_101-2_1564413301__ 207M - 18.1G -

ssd-rpool/vm-101-disk-0@__replicate_101-3_1564470016__ 155M - 18.1G -

ssd-rpool/vm-101-disk-0@__replicate_101-1_1564540201__ 34.9M - 18.1G -

Target output:

root@TN1-PVE-21:~# zfs list -t snapshot | grep 101

ssd-rpool/vm-101-disk-0@cert_renew01 6.20G - 17.5G -

ssd-rpool/vm-101-disk-0@__replicate_101-2_1564413301__ 2.50M - 18.1G -

ssd-rpool/vm-101-disk-0@__replicate_101-1_1564413304__ 2.50M - 18.1G -

ssd-rpool/vm-101-disk-0@__replicate_101-3_1564470016__ 117M - 18.1G -

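ssd-rpool/vm-101-disk-0@__replicate_101-0_1564477321__ 0B - 18.1G -

The two lists differ considerably: the target holds snapshots the source does not (such as cert_renew01), and the source holds one the target lacks. So deleting this VM's data on the target is enough to get replication running normally again.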
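Comparing two long listings by eye is error-prone. A small sketch, assuming the output of `zfs list -t snapshot | grep 101` was saved to one file per host (the file names are up to you), extracts the snapshot names (the part after `@`) and uses `sort` and `comm` to show which snapshots both sides share and which exist on only one side. `snapshot_names` and `compare_snapshots` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers: compare the snapshot lists captured on the source and
# the target with `zfs list -t snapshot | grep 101 > <file>`.
# LC_ALL=C makes sort and comm use the same byte-wise ordering.

# Print the snapshot names (text after '@' in the first column), sorted.
snapshot_names() {
    awk '{ n = index($1, "@"); if (n > 0) print substr($1, n + 1) }' "$1" |
        LC_ALL=C sort
}

# Usage: compare_snapshots SOURCE_LIST TARGET_LIST
compare_snapshots() {
    src_names=$(mktemp)
    dst_names=$(mktemp)
    snapshot_names "$1" > "$src_names"
    snapshot_names "$2" > "$dst_names"
    echo "common:"
    LC_ALL=C comm -12 "$src_names" "$dst_names"
    echo "source only:"
    LC_ALL=C comm -23 "$src_names" "$dst_names"
    echo "target only:"
    LC_ALL=C comm -13 "$src_names" "$dst_names"
    rm -f "$src_names" "$dst_names"
}
```

With the two listings from this case, it reports two common snapshots (`__replicate_101-2_1564413301__` and `__replicate_101-3_1564470016__`), one source-only snapshot (`__replicate_101-1_1564540201__`), and three target-only snapshots, including `cert_renew01`.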

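On the target, first find where the VM's disk is stored:

zfs list | grep 101

Then delete the VM's data:

zfs destroy ssd-rpool/vm-101-disk-0

This fails with the message below because the volume still has snapshots, so those snapshots must be deleted as well: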

cannot destroy 'ssd-rpool/vm-101-disk-0': volume has children

use '-r' to destroy the following datasets:

ssd-rpool/vm-101-disk-0@cert_renew01

ssd-rpool/vm-101-disk-0@__replicate_101-3_1564470016__

ssd-rpool/vm-101-disk-0@__replicate_101-2_1564413301__

ssd-rpool/vm-101-disk-0@__replicate_101-0_1564477321__

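ssd-rpool/vm-101-disk-0@__replicate_101-1_1564413304__

To delete the snapshots together with the volume, add the -r option:

zfs destroy -r ssd-rpool/vm-101-disk-0

After the deletion, go back to the source host and run the job again to check that it succeeds: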

pvesr run --id 101-0 --verbose
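`zfs destroy -r` removes every snapshot of the volume on the target, including the manually created `cert_renew01` snapshot listed in the error above. A more cautious sketch previews the deletion with a dry run (`-n`) and verbose output (`-v`), then asks for confirmation before running the real `zfs destroy -r`. `destroy_with_preview` and the `ZFS_BIN` override are hypothetical names; run it on the target host.

```shell
#!/bin/sh
# Hypothetical helper: preview what "zfs destroy -r" would remove, then ask
# before deleting. Run on the target host. ZFS_BIN exists only for testing.
ZFS_BIN="${ZFS_BIN:-zfs}"

destroy_with_preview() {
    dataset="$1"   # e.g. ssd-rpool/vm-101-disk-0
    # -n: dry run (nothing is deleted); -v: list what would be destroyed;
    # -r: include all snapshots of the volume.
    "$ZFS_BIN" destroy -rnv "$dataset" || return 1
    printf 'Destroy %s and all of its snapshots? [y/N] ' "$dataset"
    read -r answer || answer=""
    if [ "$answer" = y ]; then
        "$ZFS_BIN" destroy -r "$dataset"
    else
        echo "aborted"
    fi
}

# Example: destroy_with_preview ssd-rpool/vm-101-disk-0
```

Any answer other than `y` leaves the volume and its snapshots untouched, which gives a chance to keep a manual snapshot such as `cert_renew01` elsewhere before it is lost.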